Charles Stross jots down some rules that govern contemporary politics … I cringe because I think they may be true … and the comments only make it worse. :/

I like good stories and came across Dracula Untold. I didn't like it very much. Maybe it's because of my heightened sensitivity to anti-Islamic racism. Or maybe it's because the main theme of the movie seems to be that it's OK to join the forces of evil as long as your intention is to protect your family and your country … that may make sense to you, but it doesn't to me.

The filmmakers try to accomplish this by twisting the historical context: the time and place, the people involved, and the loyalties they had. They also try to convey that evil is not something despicable in itself, but a tool to be used by the powers in charge.

I assume you've seen the movie and can relate the following facts to the plot and the characters.

My first pain point is the movie's extremely distorted "Vlad" and "Mehmet" figures. They are created by freely mixing Vlad II …

… and Vlad III.

And by freely mixing Murad II …

… and Mehmet II.

My second and more general pain point is the movie's morals, which are strange, to say the least. :/ Among them seem to be:

I find this extremely troubling. o_O

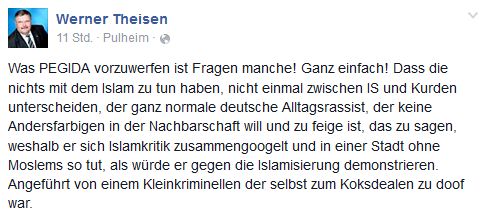

I think Mr. Theisen sums it up quite well. 😀

Still, the "whole thing" is very strange. :/

The French speaker on stage cannot imagine "that there are racists among us," while a choir director from Würzburg wants to shoot all Muslims. Such contradictions go unnoticed at Pegida. There, the flag of the far-right "German Defense League" flutters peacefully in the Dresden evening wind not far from the Israeli one. Across the whole catalogue of the enraged citizen, there is something for everyone to shout "Yes, sir!" about, including its opposite. The main thing is that something is bad and someone else is to blame.

— Telepolis

But one thing is clear: Germany has a massive racism problem!

And for everyone who finds all of this too much text, there is istdasabendlandschonislamisiert.de. 😀

The stories about this Edja Snodow are like the mirror image of a world nobody wants to have.

https://twitter.com/riyadpr/status/543043457766653952

Human Rights Watch examined about 500 U.S. terrorism-related trials and reached a "shocking" conclusion.

So this means that in at least 50% of the cases where the authorities were "confident" enough to go to trial at all, the case falls flat on its face on closer inspection. :/

It's sad that it takes 120+ scholars to refute a bunch of lunatics. As we all know, ISIL stands for "Impostors, Sadists and Immoral Lunatics."

https://twitter.com/riyadpr/status/518732469089353729

https://twitter.com/moonpolysoft/status/510993148302983168

In 1972 a study commissioned by the Club of Rome on growth trends in world population, industrialisation, pollution, food production, and resource depletion was published as a book called "The Limits to Growth." Its authors simulated several scenarios of what would happen up to the year 2100, depending on whether humanity took decisive action on environmental and resource issues. Forty years later, the world pretty much matches the worst prediction.

An awesome talk with Bruce Schneier and Julian Sanchez. They ask just the right questions and give an eye-opening view into what is possible even with today's technology.

https://youtu.be/JbQeABIoO6A